Tokenization has taken the world by storm. It is a technology that could fundamentally change how medical data is managed, secured, and used in today's rapidly changing healthcare environment. Healthcare tokenization offers a next-generation way to manage sensitive patient information using advanced cryptographic techniques, often combined with blockchain technology. This blog explores healthcare tokenization, its benefits, and its future potential.

What is Healthcare Tokenization?

Healthcare tokenization refers to replacing sensitive medical data with digital tokens. A token is a surrogate value, generated randomly or cryptographically, that stands in for the actual data when managing patient records, processing transactions, and securing information. Unlike traditional data protection methods, tokenization substitutes sensitive information with tokens that have no meaning or value outside the system. The actual data is stored separately in a protected environment, which keeps it confidential.

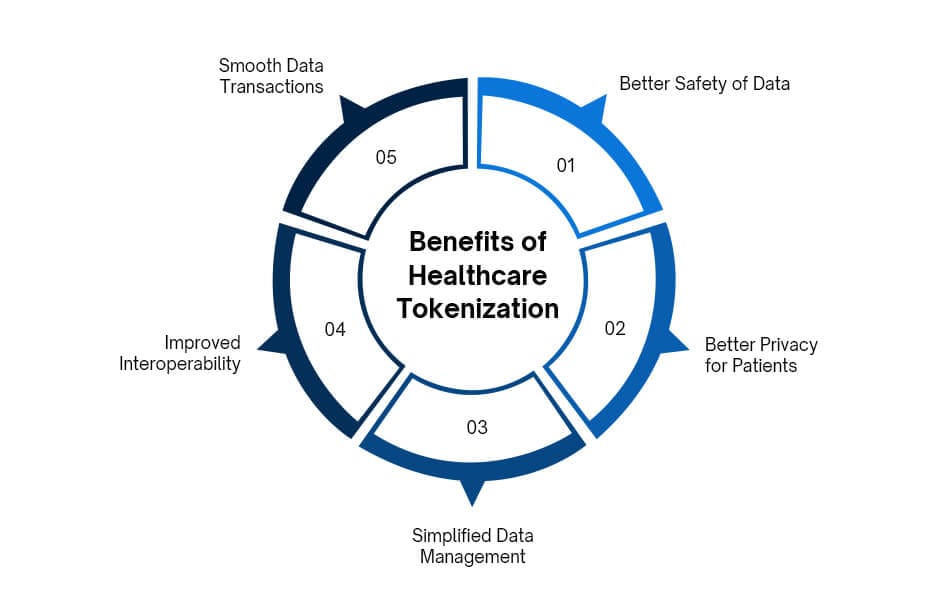

Benefits of Healthcare Tokenization

Healthcare tokenization offers many benefits. Some of them are listed below:

Better Data Security

The most important advantage of healthcare data tokenization is stronger data security. In traditional systems, sensitive information such as patient records is kept in databases that are prone to breaches. Tokenization minimizes this risk by replacing sensitive data with tokens that are meaningless if intercepted. Even in the case of unauthorized access, an attacker cannot interpret or use the information without access to the secure token store (or, for encryption-based tokens, the right keys).

Tokenization also helps protect against unauthorized access and hacks, reducing the risk of identity theft and fraud. The mapping between tokens and the original data is kept in a secure environment, and only authorized personnel can retrieve the original data. This kind of security framework helps organizations meet stringent regulatory standards such as HIPAA and protects patient information end to end.

Better Privacy for Patients

Tokenization plays an important role in protecting patient privacy. Healthcare providers can use tokens in place of sensitive medical information, so patient data is not exposed in day-to-day workflows. A token can reference a patient's record without revealing the sensitive information behind it. This approach supports good privacy practices and helps build trust between patients and healthcare providers.

Tokenization allows patient data to be shared among healthcare organizations without losing confidentiality. For example, tokenized data can be used in research studies or shared with third parties without breaching patient privacy. In this way, patient information stays protected while data can still be shared to make healthcare operations more secure and efficient.

Simplified Data Management

Handling large volumes of medical data is time-consuming and error-prone. Tokenization simplifies this by providing a consistent, efficient way to handle and process medical records. Because tokens replace sensitive data across systems, information becomes easier to track, manage, and transfer securely.

Healthcare operations can also become more efficient because there is less manual data handling and therefore fewer errors. Automated processes built around tokens make administrative tasks, such as updating patient records and exchanging data, easier and faster, with fewer opportunities for error. The result is faster and more accurate data management.

Improved Interoperability

Healthcare tokenization solutions can also improve interoperability across different healthcare systems and organizations. Traditionally, disparate systems have struggled to communicate or exchange data seamlessly. Tokenization addresses this challenge by providing a common framework for exchanging data.

It lets healthcare providers share patient data across platforms more safely and securely. Better interoperability improves care coordination by ensuring that health professionals receive comprehensive, up-to-date patient information. It also supports deeper integration of healthcare data from different sources, leading to more coordinated delivery of healthcare services to patients.
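One common way to give separate systems a shared framework is deterministic, keyed tokenization: every system that holds the same secret key turns the same identifier into the same token, so records can be matched without the identifier itself being exchanged. The sketch below is illustrative only. It assumes HMAC-SHA256 as the token function, a hypothetical shared key, and made-up record fields, none of which come from a specific product or standard.

```python
import hashlib
import hmac

# Hypothetical shared secret. In practice it would be held in a key-management
# system and never hard-coded.
SHARED_KEY = b"example-key-held-by-participating-systems"


def deterministic_token(patient_id: str, key: bytes = SHARED_KEY) -> str:
    """Map an identifier to a stable token with HMAC-SHA256.

    The same identifier (after normalization) always yields the same token.
    Without the key, the token reveals nothing useful about the identifier.
    """
    normalized = patient_id.strip().upper()  # avoid mismatches from formatting
    digest = hmac.new(key, normalized.encode("utf-8"), hashlib.sha256).hexdigest()
    return "tok_" + digest[:32]  # keep 128 bits of the digest


# Two systems derive tokens independently from their own copies of the ID.
hospital_record = {"token": deterministic_token("mrn-004217"), "diagnosis": "type 2 diabetes"}
lab_record = {"token": deterministic_token(" MRN-004217 "), "hba1c_percent": 7.4}

# Records can be linked on the token alone; the raw MRN never leaves either system.
if hospital_record["token"] == lab_record["token"]:
    merged = {**hospital_record, **lab_record}
    print(merged)
```

The trade-off with this design is that anyone holding the key can test guessed identifiers against a token, so the key must be protected as carefully as the data itself.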

Smooth Data Transactions

Tokenization in healthcare can significantly improve the speed and efficiency of transactions involving protected health information. Transactions such as insurance claims, billing, and sharing of medical records are complex and time-consuming. Tokens can ease this administrative burden on healthcare entities by speeding up information exchange.

Tokens make transaction processing simpler and more secure, streamlining data exchange between organizations. Potential by-products include faster claim approvals, fewer errors, and better operational performance. For patients, quicker processing means shorter delays in receiving essential services.

Understanding the Mechanics of Healthcare Tokenization

In a broader sense, healthcare tokenization can also mean creating digital representations of real-world assets. That involves identifying the asset (medical equipment, patient data, or insurance claims), developing smart contracts that define the characteristics of the tokens to be minted, and finally minting the tokens on a suitable blockchain platform.

For sensitive health data, the mechanics of tokenization work as follows:

Data Conversion: The first step in tokenization involves converting sensitive health data, such as patient records or medical histories, into tokens. These tokens are random strings of characters that hold no intrinsic value or information about the data they represent.

Token Storage: The original sensitive data is securely stored in a separate database, while the tokens are used in everyday transactions or processes. The database containing the actual data is highly protected and accessible only through secure methods.

Access Control: When authorized personnel need to access the actual data, the system uses the token to retrieve the information from the secure database. This ensures that sensitive data is not exposed during transactions or in non-secure environments.

Data Integrity and Privacy: The use of tokens helps protect patient information from unauthorized access and potential breaches. Even if tokens are intercepted, they cannot be used to derive or compromise the original data.
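The four steps above can be sketched with a small in-memory token vault. This is a hypothetical illustration, not a production design: the class name, the role-based check, and the token format are assumptions, and a real vault would persist data in a hardened, encrypted store with proper authentication and auditing.

```python
import secrets


class TokenVault:
    """Toy token vault: maps random tokens to original values (in memory only)."""

    def __init__(self, authorized_roles: set[str]):
        # Token storage: the only place where tokens and real values are linked.
        self._token_to_value: dict[str, str] = {}
        self._value_to_token: dict[str, str] = {}
        self._authorized_roles = authorized_roles

    def tokenize(self, value: str) -> str:
        """Data conversion: swap a sensitive value for a random token."""
        if value in self._value_to_token:  # reuse the token for a repeated value
            return self._value_to_token[value]
        token = "tok_" + secrets.token_hex(16)  # random: no link to the value
        while token in self._token_to_value:  # guard against a (very unlikely) collision
            token = "tok_" + secrets.token_hex(16)
        self._token_to_value[token] = value
        self._value_to_token[value] = token
        return token

    def detokenize(self, token: str, role: str) -> str:
        """Access control: only authorized roles can recover the original value."""
        if role not in self._authorized_roles:
            raise PermissionError(f"role {role!r} is not allowed to detokenize")
        return self._token_to_value[token]


vault = TokenVault(authorized_roles={"attending_physician"})
record = {"name": "Jane Doe", "ssn": "123-45-6789", "diagnosis": "asthma"}
sensitive_fields = {"name", "ssn"}

# Everyday systems only ever see this tokenized copy of the record.
safe_record = {k: vault.tokenize(v) if k in sensitive_fields else v for k, v in record.items()}
print(safe_record)

# An authorized clinician can resolve a token back to the original value.
print(vault.detokenize(safe_record["ssn"], role="attending_physician"))
```

A caller using a role such as `"billing_clerk"` would get a `PermissionError`, and a token leaked from `safe_record` reveals nothing on its own because it was generated randomly rather than derived from the value.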

Future Potential of Healthcare Tokenization

Let’s see what the future holds for healthcare tokenization:

Improved Data Security

Adaptive Security Measures: As cyber threats grow more sophisticated, tokenization may develop adaptive security features such as dynamic tokenization, in which tokens change periodically or based on context such as user behavior, strengthening protection against evolving threats.

Integrating Encryption: Tokenization processes will increasingly incorporate advanced encryption techniques for a multi-layered approach to data security. For example, the tokens themselves can be encrypted before transmission or storage to add another layer of security.

Regulatory Standards: Future security and data protection frameworks for healthcare might require tokenization as a minimum standard, which could make it a ubiquitous tool in health information management.
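The article does not say how dynamic tokenization would be implemented. One simple way to approximate tokens that change periodically is to mix a time window into a keyed hash, so a token is only accepted during the window in which it was issued. The key, the one-hour period, and the function names below are assumptions for illustration.

```python
import hashlib
import hmac

ROTATION_KEY = b"hypothetical-rotation-key"  # assumption: kept in a key-management system
PERIOD_SECONDS = 3600  # assumption: tokens rotate every hour


def window_index(timestamp: float, period: int = PERIOD_SECONDS) -> int:
    """Number of the rotation window that contains the timestamp."""
    return int(timestamp // period)


def rotating_token(value: str, timestamp: float) -> str:
    """Token that is stable within one window and changes in the next one."""
    message = f"{window_index(timestamp)}:{value}".encode("utf-8")
    return hmac.new(ROTATION_KEY, message, hashlib.sha256).hexdigest()[:32]


def is_current(token: str, value: str, now: float) -> bool:
    """Accept a token only if it was issued in the current window."""
    return hmac.compare_digest(token, rotating_token(value, now))


issued_at = 1_700_000_000.0  # fixed example time (Unix seconds)
token = rotating_token("patient-881", issued_at)
print(is_current(token, "patient-881", issued_at + 1800))            # True: same window
print(is_current(token, "patient-881", issued_at + PERIOD_SECONDS))  # False: rotated
```

A token captured by an attacker stops matching after the next rotation, at the cost of requiring every system that checks tokens to share the key and keep roughly synchronized clocks.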

Better Privacy for Patients

Patient-Centric Models: Healthcare tokenization could support patient-centric models that give individuals more control. For instance, patients might manage who has access to their information and under what conditions, so their privacy is protected according to their preferences.

Granular Access Controls: Next-generation tokenization systems could support very granular access controls, letting patients set access rules at a finer level (for example, by type of information or by user) to increase their privacy and control.

Next-Generation Regulations: As privacy regulations such as the GDPR and CCPA evolve, tokenization could become vital for meeting their requirements. Future regulations could necessitate more sophisticated tokenization practices for compliance.
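Granular, patient-controlled access can be modeled as a per-patient policy that maps each grantee to the data categories they may see. The sketch below is a hypothetical in-memory model; the category names, grantee names, and the `ConsentPolicy` class are illustrative assumptions, and a real system would enforce such a policy at the point where tokens are resolved.

```python
from dataclasses import dataclass, field


@dataclass
class ConsentPolicy:
    """Patient-defined rules: which grantee may see which data categories."""

    patient_token: str
    grants: dict[str, set[str]] = field(default_factory=dict)

    def grant(self, grantee: str, category: str) -> None:
        self.grants.setdefault(grantee, set()).add(category)

    def revoke(self, grantee: str, category: str) -> None:
        self.grants.get(grantee, set()).discard(category)

    def allows(self, grantee: str, category: str) -> bool:
        return category in self.grants.get(grantee, set())


def filtered_view(record: dict[str, dict], policy: ConsentPolicy, grantee: str) -> dict[str, dict]:
    """Return only the categories this grantee is currently allowed to see."""
    return {cat: data for cat, data in record.items() if policy.allows(grantee, cat)}


policy = ConsentPolicy(patient_token="tok_3f9a")
policy.grant("dr_lee", "medications")
policy.grant("dr_lee", "lab_results")
policy.grant("research_team", "lab_results")

record = {
    "medications": {"metformin": "500 mg"},
    "lab_results": {"hba1c_percent": 7.4},
    "mental_health": {"notes": "restricted"},
}

print(filtered_view(record, policy, "dr_lee"))         # medications and lab_results
print(filtered_view(record, policy, "research_team"))  # lab_results only

policy.revoke("dr_lee", "lab_results")                 # patient withdraws consent
print(filtered_view(record, policy, "dr_lee"))         # medications only
```

Because access is decided per grantee and per category, a patient can share lab results with a research team while keeping more sensitive categories private from everyone.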

Simplified Data Sharing

Interoperability Standards: Tokenization can play a big role in developing and implementing interoperability standards, supporting seamless data exchange between disparate healthcare systems while keeping the data secure and private.

Blockchain-Based Sharing: Combining tokenization with blockchain technology can lead to decentralized networks for secure data sharing, making shared records tamper-resistant and transparent. This supports secure interaction among patients, providers, and researchers.

Automated Data Sharing: Improved tokenization systems may enable more secure automated data sharing, reducing the administrative burden and improving the effectiveness of healthcare operations.

Personalized Medicine

Secure Management of Genomic Data: Tokenizing genomic data could allow information used for personalized treatments to be shared with less risk of exposure. This could underpin the future development of precision medicine, in which researchers and clinicians can securely analyze detailed genetic data.

Value-Added Data Utilization: Tokenization allows large healthcare datasets from different sources to be pooled and analyzed to solve specific problems while protecting individual privacy. This opens up opportunities for faster, more precise strategies in personalized medicine.

Patient Consent and Control: Future systems may let patients consent to specific uses of their genetic data through tokenized access controls, increasing patient involvement in personalized medicine initiatives.
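Pooling works because datasets tokenized with the same scheme can be joined on the token instead of on a name or record number. The toy example below assumes two sites have already tokenized their data consistently (for example, with a shared keyed scheme); the tokens and values are made up, and the analysis is deliberately simple.

```python
from collections import defaultdict

# Hypothetical, already-tokenized extracts from two institutions.
# Site A: does the patient carry a genetic variant of interest?
genomic_site = {"tok_a1": True, "tok_b2": False, "tok_c3": True, "tok_d4": False}
# Site B: did the patient respond to a treatment?
outcome_site = {"tok_a1": True, "tok_b2": False, "tok_c3": True, "tok_d4": True, "tok_e5": False}


def response_rate_by_variant(genomics: dict[str, bool], outcomes: dict[str, bool]) -> dict[bool, float]:
    """Join on tokens and compute the response rate for carriers vs. non-carriers."""
    tallies = defaultdict(lambda: [0, 0])  # variant flag -> [responders, linked patients]
    for token, carries_variant in genomics.items():
        if token not in outcomes:
            continue  # only patients present in both datasets can be linked
        tallies[carries_variant][1] += 1
        if outcomes[token]:
            tallies[carries_variant][0] += 1
    return {flag: responders / total for flag, (responders, total) in tallies.items()}


print(response_rate_by_variant(genomic_site, outcome_site))  # {True: 1.0, False: 0.5}
```

Neither site had to reveal who its patients are. Real studies would typically add further safeguards, such as minimum group sizes, since very small groups can make individuals identifiable.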

Advanced Research

Data De-identification: Tokenization can help de-identify research data, allowing access to large datasets without revealing patients' identities. This may lead to faster discoveries and innovations in medical research.

Secure Collaborative Research: Tokenization could support secure collaboration, allowing researchers from different institutions to work together on sensitive data without exposing it to one another.

Advanced Analytics: Advanced analytics tools are expected to work directly on tokenized data, processing it without exposing the sensitive underlying information and thereby enabling more advanced research.
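De-identifying a record for research usually combines several steps: dropping direct identifiers, replacing the record number with a token, and generalizing fields such as dates and ZIP codes. The sketch below loosely follows the kind of generalization used in HIPAA's Safe Harbor method (keeping only the year of a date and the first three ZIP digits), but it is not a complete implementation of that rule; the field names and the study key are hypothetical.

```python
import hashlib
import hmac

STUDY_KEY = b"hypothetical-study-specific-key"  # a separate key per study limits cross-study linkage
DIRECT_IDENTIFIERS = {"name", "ssn", "phone", "email", "address"}


def subject_token(mrn: str) -> str:
    """Stable pseudonym within one study; meaningless outside it without the key."""
    return hmac.new(STUDY_KEY, mrn.encode("utf-8"), hashlib.sha256).hexdigest()[:24]


def deidentify(record: dict[str, str]) -> dict[str, str]:
    """Drop direct identifiers, tokenize the MRN, and generalize quasi-identifiers."""
    result: dict[str, str] = {}
    for field_name, value in record.items():
        if field_name in DIRECT_IDENTIFIERS:
            continue  # removed entirely
        if field_name == "mrn":
            result["subject_token"] = subject_token(value)
        elif field_name == "birth_date":
            result["birth_year"] = value[:4]  # "YYYY-MM-DD" -> "YYYY"
        elif field_name == "zip":
            result["zip3"] = value[:3]  # keep only the first three digits
        else:
            result[field_name] = value  # clinical fields pass through unchanged
    return result


patient = {
    "mrn": "MRN-004217",
    "name": "Jane Doe",
    "phone": "555-0100",
    "birth_date": "1984-06-02",
    "zip": "02139",
    "diagnosis": "asthma",
}
print(deidentify(patient))
```

Using a study-specific key means the same patient gets different tokens in different studies, which makes it harder to link records across projects without authorization.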

Regulatory Compliance

Adaptive Compliance Solutions: Technology cannot always keep pace with regulation, but tokenization technologies are likely to adapt to new and changing regulatory requirements, possibly including automated compliance features that keep data handling aligned with the latest practices.

Enhanced Cross-Jurisdictional Compliance: As regulations around the world become more uniform, tokenization could make cross-jurisdictional compliance easier, helping businesses that operate in one or many jurisdictions without weakening data protection.

Audit and Reporting: Next-generation tokenization solutions might include auditing and reporting capabilities that help organizations demonstrate regulatory compliance and keep data operations transparent.

Giving Patients Control over Their Own Data

Personal Data Portals: Tokenization may enable personal data portals where patients manage and control their health information. Patients could grant or revoke access at their own discretion.

Blockchain Integration: Integrating tokenization with blockchain technology can provide a clear, tamper-evident record of who accessed data and when, increasing trust in and control over personal information.

Customizable Privacy Settings: Future tokenization systems could offer customizable privacy settings that let patients restrict or open up their data for sharing and use by others, according to their wishes.
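A tamper-evident record of who accessed data and when comes from hash-linking entries, which is also how blockchains chain their blocks. The sketch below is a single-party, in-memory hash chain rather than a blockchain: it makes edits to past entries detectable but provides no distributed consensus. The class, field names, and log contents are hypothetical.

```python
import hashlib
import json
from datetime import datetime, timezone
from typing import Optional

GENESIS_HASH = "0" * 64  # placeholder "previous hash" for the first entry


def entry_digest(body: dict) -> str:
    """SHA-256 over a canonical JSON encoding of an entry (without its own hash)."""
    canonical = json.dumps(body, sort_keys=True, separators=(",", ":"))
    return hashlib.sha256(canonical.encode("utf-8")).hexdigest()


class AccessLog:
    """Append-only log in which every entry commits to the hash of the one before it."""

    def __init__(self) -> None:
        self.entries: list[dict] = []

    def record(self, accessor: str, token: str, action: str, when: Optional[str] = None) -> dict:
        body = {
            "accessor": accessor,
            "token": token,
            "action": action,
            "when": when or datetime.now(timezone.utc).isoformat(),
            "prev_hash": self.entries[-1]["hash"] if self.entries else GENESIS_HASH,
        }
        entry = {**body, "hash": entry_digest(body)}
        self.entries.append(entry)
        return entry

    def verify(self) -> bool:
        """Recompute each hash; edits, reordering, or removal of any entry but the last break the chain."""
        prev_hash = GENESIS_HASH
        for entry in self.entries:
            body = {k: v for k, v in entry.items() if k != "hash"}
            if body["prev_hash"] != prev_hash or entry_digest(body) != entry["hash"]:
                return False
            prev_hash = entry["hash"]
        return True


log = AccessLog()
log.record("dr_lee", "tok_3f9a", "view:medications", when="2024-08-12T09:00:00+00:00")
log.record("research_team", "tok_3f9a", "view:lab_results", when="2024-08-12T10:30:00+00:00")
print(log.verify())  # True

log.entries[0]["accessor"] = "someone_else"  # tamper with history
print(log.verify())  # False
```

A blockchain adds replication and consensus on top of this idea, so that no single party can quietly rewrite or truncate the chain.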

Integration with Emerging Technologies

AI and ML Integration: Incorporating artificial intelligence and machine learning into tokenization systems could strengthen their data analysis capabilities. These technologies could detect patterns and insights in tokenized data without compromising the underlying information.

IoT and Wearables: As Internet of Things (IoT) devices and wearables become more common, tokenization could help safeguard the data they generate, so that health information from these sources is protected and used appropriately.

Smart Contracts: Blockchain-based tokenization could use smart contracts to automate, track, and enforce data-sharing agreements as transactions happen.
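The article does not name a smart contract platform, so the sketch below models, in plain Python, the kind of rules a data-sharing smart contract might enforce automatically: the parties, permitted purposes, an expiry date, and a request cap. It is not contract code and does not run on a blockchain; the class names, parties, and terms are hypothetical.

```python
from dataclasses import dataclass
from datetime import date


@dataclass(frozen=True)
class SharingAgreement:
    """Terms a data-sharing smart contract might encode (modeled in plain Python)."""

    provider: str
    recipient: str
    permitted_purposes: frozenset[str]
    expires_on: date
    max_requests: int


class AgreementEnforcer:
    """Checks every request against the agreement and records each decision."""

    def __init__(self, agreement: SharingAgreement):
        self.agreement = agreement
        self.requests_served = 0
        self.audit_trail: list[tuple[date, str, bool]] = []

    def request(self, recipient: str, purpose: str, today: date) -> bool:
        a = self.agreement
        allowed = (
            recipient == a.recipient
            and purpose in a.permitted_purposes
            and today <= a.expires_on
            and self.requests_served < a.max_requests
        )
        if allowed:
            self.requests_served += 1
        self.audit_trail.append((today, purpose, allowed))  # track every decision
        return allowed


agreement = SharingAgreement(
    provider="city_hospital",
    recipient="trial_sponsor",
    permitted_purposes=frozenset({"adverse_event_monitoring"}),
    expires_on=date(2025, 12, 31),
    max_requests=2,
)
enforcer = AgreementEnforcer(agreement)
print(enforcer.request("trial_sponsor", "adverse_event_monitoring", date(2025, 1, 10)))  # True
print(enforcer.request("trial_sponsor", "marketing", date(2025, 1, 11)))                 # False: purpose
print(enforcer.request("trial_sponsor", "adverse_event_monitoring", date(2026, 1, 5)))   # False: expired
```

On an actual blockchain, the same checks would live in contract code so that neither party could bypass them, and the audit trail would be recorded on-chain.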

Conclusion

Healthcare tokenization can already do much to secure health data. It can foster better data security, patient privacy, smoother data management, and interoperability for healthcare organizations. Challenges such as compliance, integration with other systems, and technology adoption remain, but the potential, both now and in the future, is significant. Tokenization sits at the heart of a changing technology landscape and is leading the transformation toward patient-centered, efficient, and secure healthcare data management.